In early 2026, an open-source project called Clawdbot went viral across developer and AI communities. It promised something many people had been waiting for: a personal AI assistant that does not just chat, but actually acts.

After trademark pressure from Anthropic, the project was rebranded as Moltbot, but the core idea remained the same. A self-hosted, proactive AI agent that can manage your calendar, clear emails, send messages, control browsers, run scripts, and integrate with platforms like WhatsApp, Telegram, and Discord. Everything runs locally, often on a Mac Mini because of its unified memory advantages.

Let’s clear one thing up immediately.

Moltbot is not fake.

It is not vaporware.

It is not a scam by default.

It is a real, active open-source project created by Peter Steinberger, with a GitHub repository that quickly accumulated thousands of stars, frequent contributions, and a growing community of power users experimenting with agentic AI workflows.

And yet, despite all of that, Moltbot has quietly become one of the most dangerous pieces of software many people are running without realizing it.

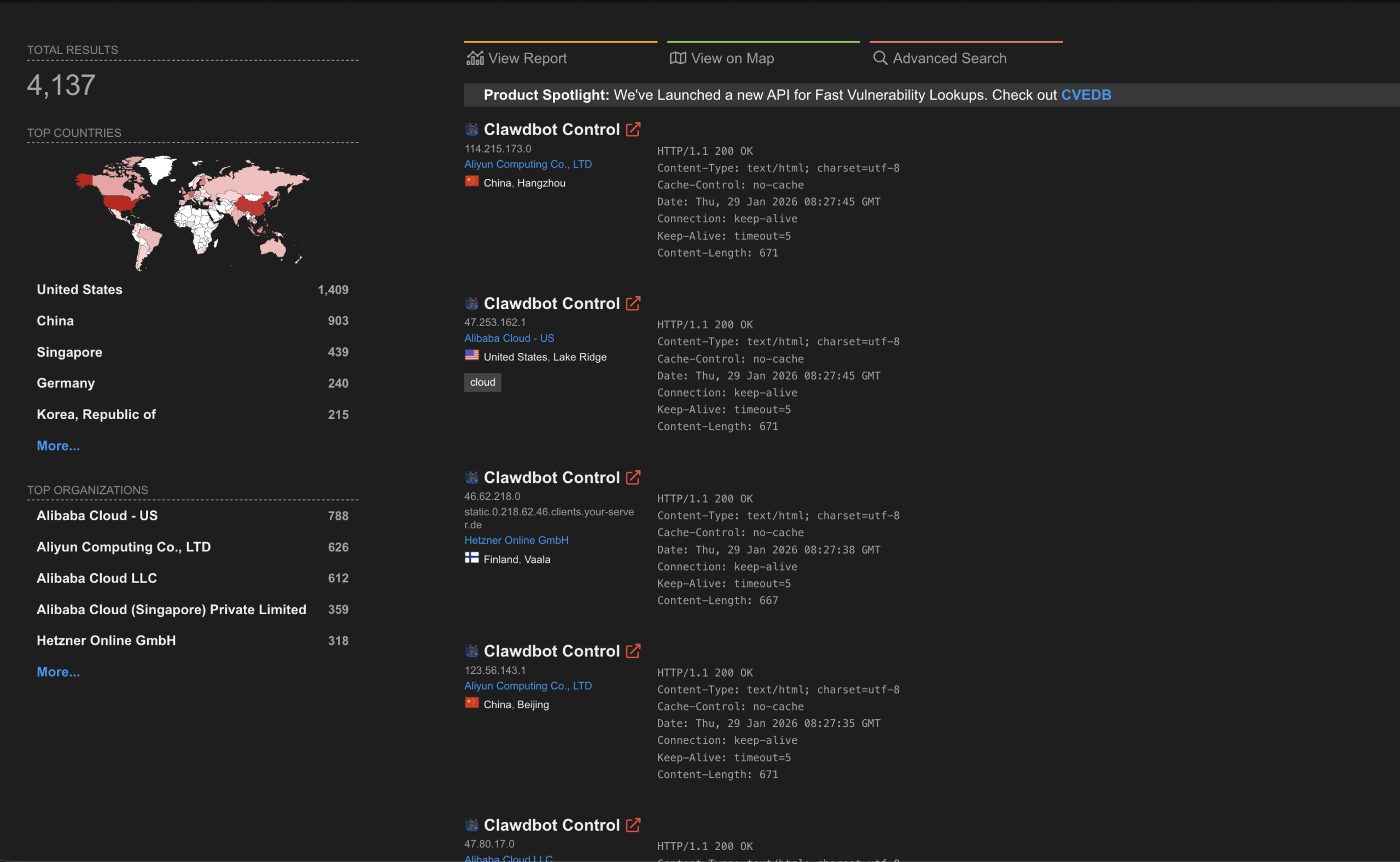

Use the following search queries in the Shodan search bar to identify exposed instances:

"Moltbot" (Note: The software rebranded from Clawdbot to Moltbot following a C&D order)

"Clawdbot Control"

"clawdbot-gw"

port:18789

Power Comes From Privilege, and Privilege Comes With Risk

Moltbot works precisely because it is deeply privileged.

To be useful, it is commonly granted access to:

- Shell commands and system processes

- The full local file system

- API keys and OAuth credentials

- Email inboxes and calendars

- Messaging platforms

- Browser automation

- Persistent memory stored on disk

Even the project’s creator has openly described running it with shell access as “spicy” and has acknowledged that there is no perfectly secure setup.

That honesty is refreshing.

What is far more concerning is how many users are ignoring it.

The Biggest Mistake: Running Moltbot on Open VPS Servers

The most alarming pattern is not local installs. It is public VPS deployments.

A large number of users are installing Moltbot on cloud servers, exposing its control interface to the internet, and treating it like just another Docker app. In many cases:

- No authentication is configured

- Authentication is weak or misconfigured

- Ports are publicly exposed

- The instance is bound to a public IP

These setups are trivially discoverable using Shodan and similar internet scanners.

Security researchers scanning Shodan have already identified hundreds, likely over a thousand, of exposed Moltbot or Clawdbot instances. These are not harmless dashboards. They are AI agents with system-level access.

What is being leaked in the wild includes:

- LLM API keys (OpenAI, Anthropic, others)

- Telegram, Discord, and WhatsApp bot tokens

- OAuth credentials for email and calendar services

- Full conversation histories and memory files

- Remote command execution capabilities

At that point, your “personal AI assistant” is no longer personal. It is a publicly accessible backdoor.

This risk is amplified by the false sense of safety many people associate with VPS environments. Running something in the cloud does not make it safer. Exposing a privileged AI agent to the open internet makes it dramatically more dangerous.

Prompt Injection Is a Built-In Weakness, Not an Edge Case

Like most agentic AI systems, Moltbot continuously consumes untrusted input.

Emails.

Chat messages.

Calendar invites.

Documents.

This makes it inherently vulnerable to prompt injection attacks. A single malicious email or message can instruct the agent to:

- Exfiltrate credentials or files

- Send sensitive data to an external server

- Execute destructive shell commands

- Trigger unintended financial or operational actions

Because the agent operates autonomously, these actions can happen quietly. There is no flashing warning sign when your AI assistant is manipulated through an email it was designed to read.

A Perfect Target for Malware and Infostealers

Moltbot also creates a new kind of honeypot.

By design, it centralizes credentials, messages, files, and automation logic in one place. For attackers, that is an ideal target. Compromise one machine and you gain access to multiple platforms, accounts, and workflows.

Commodity malware and infostealers increasingly look for setups like this because the payoff is high and the defenses are often minimal. One breach can cascade into email takeovers, account hijacking, API abuse, and persistent access.

The Inevitable Wave of Scams and Clones

As with any viral tool, hype attracted abuse.

The Clawdbot name was quickly used for:

- Fake VS Code extensions that installed remote access tools such as ScreenConnect

- Crypto token scams leveraging the brand before and after the rebrand

- Hijacked social accounts promoting meme coins and fake projects

None of this is unique to Moltbot, but it highlights how quickly an experimental tool can become part of a broader attack surface once attention spikes.

Real Incidents Are Already Being Reported

This is not purely theoretical risk.

Users have already reported cases where agents:

- Executed reckless or destructive code

- Wiped simulated trading accounts

- Behaved unpredictably in high-stakes environments

Security professionals increasingly treat Moltbot as privileged infrastructure, not as a productivity app. The consensus is clear: unrestricted access without strict isolation is a bad idea.

The Bottom Line

Moltbot is real.

It is innovative.

And it points clearly toward the future of personal AI agents.

But its security risks are not exaggerated. They are observable, documented, and actively unfolding, especially among users deploying it on publicly accessible VPS servers that are easily discoverable via Shodan.

If you choose to experiment with Moltbot, treat it like what it really is: an experimental system with near-root access.

That means:

- Prefer local or air-gapped setups

- Never expose it publicly

- Lock down authentication aggressively

- Restrict tools and permissions

- Assume anything it touches could be compromised

The future of AI assistants is exciting.

Right now, though, Moltbot is less “safe productivity tool” and more “extremely capable experimental infrastructure.”

What do you think?

It is nice to know your opinion. Leave a comment.